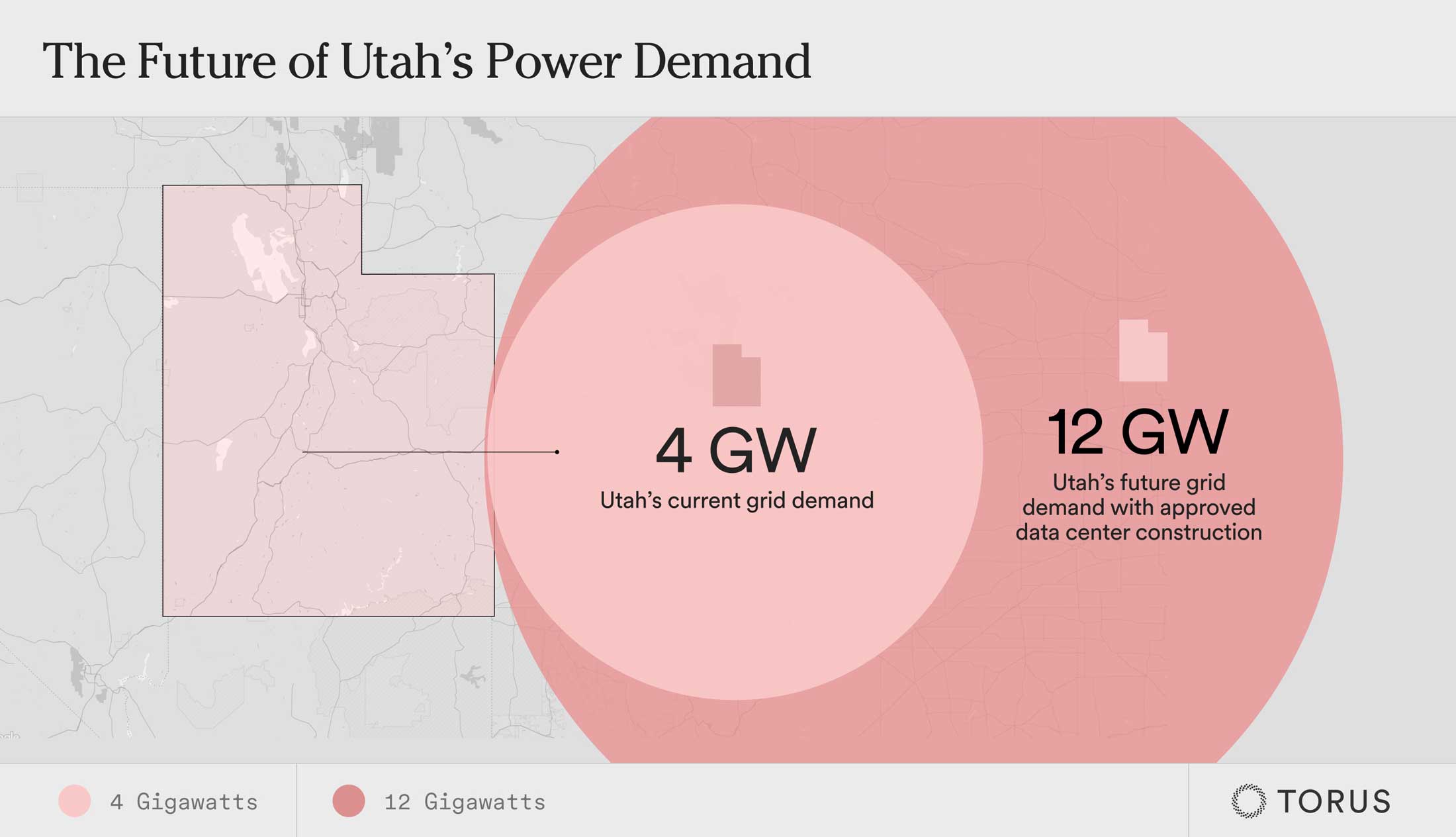

Last week, I came across a data point that really conveyed how big an energy demand problem we’ll face in the coming years.

The overall grid demand for the entire state of Utah sits between 3.5 and 4 GW of electricity. If every new data center being proposed in the state were built, that number would rise to 12 GW, roughly tripling overall electricity demand.
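As a quick sanity check on the "tripling" claim, here is a minimal Python sketch that uses only the figures quoted above. The function and variable names are illustrative assumptions, not part of any dataset or tool.

```python
def demand_multiple(current_gw: float, projected_gw: float) -> float:
    """Return how many times larger projected demand is than current demand."""
    return projected_gw / current_gw


# Figures quoted in the text, in gigawatts.
current_range_gw = (3.5, 4.0)  # Utah's current grid demand range
projected_gw = 12.0            # demand if every proposed data center were built

for current_gw in current_range_gw:
    multiple = demand_multiple(current_gw, projected_gw)
    print(f"{current_gw} GW -> {projected_gw} GW is a {multiple:.1f}x increase")
```

Depending on which end of the current range you start from, the increase works out to between 3x and about 3.4x.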

Utah’s position isn’t unique. As the rise of AI continues to fuel a data center boom, states and regions around the country are seeing power needs balloon, a trend that’s likely to accelerate over the coming years.

For example, generating 15 AI images with OpenAI’s DALL-E uses enough electricity to fully charge your smartphone. OpenAI’s ChatGPT alone consumes around 1 GWh a day, enough electricity to power 33,000 homes.
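To see what the homes comparison implies, here is a small Python sketch that spreads the quoted 1 GWh per day across 33,000 homes. The function name is an illustrative assumption; the only inputs are the two figures from the text.

```python
def kwh_per_home_per_day(total_gwh_per_day: float, homes: int) -> float:
    """Daily energy per home implied by spreading a daily total across homes."""
    total_kwh = total_gwh_per_day * 1_000_000  # 1 GWh = 1,000,000 kWh
    return total_kwh / homes


implied_kwh = kwh_per_home_per_day(1.0, 33_000)
print(f"{implied_kwh:.1f} kWh per home per day")
```

The result is about 30 kWh per home per day, which is roughly in line with commonly cited averages for US household electricity use, so the comparison holds together.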

How much power do data centers actually use?

There are roughly 5,400 data centers in the United States. These 5,400 buildings already account for 3% of the country’s overall electricity consumption. To frame it another way, data centers in Northern Virginia, the United States’ leading market, require enough electricity to power 800,000 houses, which equates to more than a quarter of all homes in the state. With the rise of AI, this already significant power demand is set to skyrocket.

As a general rule, the larger and more sophisticated an AI model, the more power it requires to operate. Already, there’s talk within the industry of training models that draw 1 gigawatt of power. As models grow larger and AI adoption spreads, we’re likely to see the need for computational power (and, therefore, electricity) grow exponentially. And that’s to say nothing of the continued proliferation of IoT devices, streaming services, and smartphones, all of which rely on data centers. All told, data centers are anticipated to account for 8% of total US power demand by 2030.

Do we have enough energy to meet rising demand?

Such a significant increase in power demand raises the question: where is this electricity going to come from? Already, leaders in the AI and data center space are voicing concerns over potential roadblocks due to insufficient energy supply:

“I actually think before we hit [computing constraints], we’ll run into energy constraints.” - Mark Zuckerberg, CEO @ Meta

“I think we still don’t appreciate the energy needs of [AI]. We need fusion, or we need, like, radically cheaper solar plus storage, or something, at massive scale—like, a scale that no one is really planning for.” - Sam Altman, CEO @ OpenAI

“We’re kind of running out of power in the next 18 to 24 months.” - Marc Ganzi, CEO @ DigitalBridge

Energy constraints aren’t just a topic of conversation for industry leaders. We’re already seeing concrete examples of data center construction slowing due to dwindling supply. In 2022, Dominion Energy paused data center connections in Northern Virginia, illustrating that even leading geographic markets are struggling to keep pace with demand. While connections have since resumed, the region is now hurriedly investing in new transmission infrastructure while also considering stricter zoning requirements for new facilities. More recently, Silicon Valley Power began limiting many proposed data centers in Northern California to a maximum electricity allocation of 2 MW.

What’s the solution to all of this?

Data centers and AI face an infrastructure challenge. To accommodate more data centers, we’ll need more power plants, transmission lines, substations, renewable energy farms, and more. Infrastructure projects aren’t easy to stand up quickly. They’re often prone to construction delays and lengthy permitting hurdles. This period of rising electricity demand also happens to coincide with a push to decommission coal power plants, further putting a squeeze on utility companies.

Ultimately, there’s no one solution to this problem. To solve it, we’ll need a whole lot of new power generation coming from multiple sources—wind, solar, thermal, nuclear, and natural gas among them. We’ll also need permitting reform to enable us to build new power plants and transmission infrastructure far faster than our current pace. However, legislation often doesn’t operate quickly enough to meet our needs in the present moment.

A new model for expanding grid capacity

I’m really excited about the approach we’re taking at Torus to solve this problem. Rather than waiting for federal, state, and local governments to change permitting and zoning requirements to enable faster grid expansion, we’ve come up with a bit of a workaround. In broad strokes, here’s how it works:

- You expand grid capacity quickly but incrementally by installing Torus systems on commercial buildings, turning them into individual points of power generation and storage.

- You virtually link these individual systems together using Torus Lasso™, giving utility companies an aggregated network of energy generation and storage they can call upon to ease congestion, smooth frequency issues, and meet spikes in demand.
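To make the aggregation idea concrete, here is a minimal Python sketch of how pooled capacity from many buildings might be used to answer a utility's request. Everything in it is hypothetical: the `Site` class, the `dispatch` function, the example fleet, and the greedy largest-first allocation are illustrative assumptions, not a description of how Torus Lasso actually works.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A hypothetical building with on-site generation and storage."""
    name: str
    available_kw: float  # power the site can export right now


def dispatch(sites: list[Site], requested_kw: float) -> dict[str, float]:
    """Greedily allocate a utility request across sites, largest first.

    Returns a mapping of site name to kW assigned. If the fleet cannot
    cover the request, the allocations sum to less than requested_kw.
    """
    remaining = requested_kw
    plan: dict[str, float] = {}
    for site in sorted(sites, key=lambda s: s.available_kw, reverse=True):
        if remaining <= 0:
            break
        share = min(site.available_kw, remaining)
        if share > 0:
            plan[site.name] = share
            remaining -= share
    return plan


# Hypothetical fleet of three commercial buildings.
fleet = [Site("warehouse", 250.0), Site("office", 120.0), Site("clinic", 80.0)]
plan = dispatch(fleet, requested_kw=300.0)
print(plan)  # {'warehouse': 250.0, 'office': 50.0}
```

A real system would also need to account for battery state of charge, each building's own load, and grid constraints. The sketch only shows the core idea of treating many small sites as one callable resource.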

We’ve built and engineered a lot more to make this an effective virtual power plant (VPP) solution, and I’ll be diving into the nitty-gritty details in the coming weeks. In the meantime, I encourage you to check out our commercial product or explore the relationship between power demand and AI further. It’s a really interesting topic and one with serious implications for our future. For all the questions swirling around AI, it might all come down to whether or not we can get enough lines and poles in the ground.

More to come next week! Stay tuned :)
